Reframing 2: Hidden Influence

Following my last post, I have been working to create a greater sense of interactivity in my Ableton Live sets by reducing the number of finely controlled parameters, using fewer pedals and introducing one-to-many mappings. This allows me to build a meaningful relationship with the system in performance (Emmerson, 2013).

This Ableton set has two main signal chains. The first, visible at the bottom of the above screenshot, is a chain of processing applied to the guitar input. It uses the Max for Live Spectral Delay device to process a loop into a hazy ambient texture. I have included a stereo tremolo effect at the start of the chain to give the loop some spatial interest, and added compression and EQ (out of shot) for practical reasons: to regulate volume and subdue the build-up of unwanted frequencies.

One of my strategies for increasing interactivity in Ableton was to include generative processes or playback in my Live sets. This not only introduces the possibility of an unlimited sound palette, but also creates a dialogue between the live sound and ‘something that runs’ (Butler, 2014).

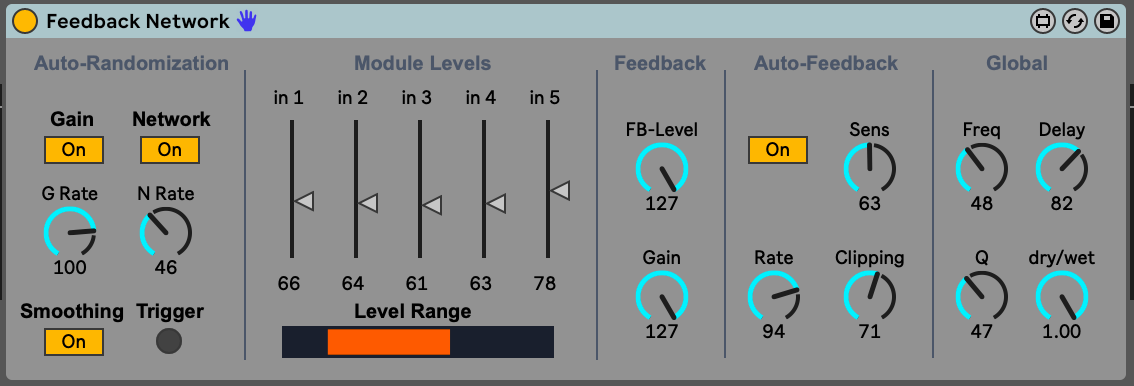

To achieve this here, I used another Max for Live device, the Feedback Network. This contains five independent ‘feedback units’, each with a bandpass filter and delay line. These units can then be fed into each other to create chaotic and unpredictable patterns. In this set, the device receives a delayed feed of the unprocessed guitar sound and, once activated, constantly outputs a shifting web of feedback across a wide frequency range, which is perfectly satisfying to listen to by itself. I then pass the signal through a compressor and limiter to tame any volume peaks, followed by a delay and the SoundHack granulation plugin ‘bubbler’. The dry/wet settings for both of these devices are set to around 30% to give the feedback added depth, and the volume of the Looper in the guitar chain is sidechained to the feedback to create some space in the mix.
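The general idea of cross-fed feedback units can be sketched in Python. This is not the Feedback Network device's actual implementation: the filter design (a standard biquad bandpass), the soft clipping, the centre frequencies, delay times and routing gains are all assumptions chosen only to illustrate five filter-plus-delay units feeding into each other.

```python
import math
import random

SAMPLE_RATE = 44100  # assumed sample rate


class FeedbackUnit:
    """One hypothetical unit: a bandpass filter followed by a delay line."""

    def __init__(self, centre_hz, q, delay_samples):
        # Biquad bandpass coefficients (constant 0 dB peak gain form).
        w0 = 2 * math.pi * centre_hz / SAMPLE_RATE
        alpha = math.sin(w0) / (2 * q)
        a0 = 1 + alpha
        self.b0 = alpha / a0
        self.b2 = -alpha / a0
        self.a1 = -2 * math.cos(w0) / a0
        self.a2 = (1 - alpha) / a0
        self.x1 = self.x2 = self.y1 = self.y2 = 0.0
        self.buffer = [0.0] * delay_samples  # circular delay buffer
        self.pos = 0

    def process(self, x):
        # Filter the incoming sample (the b1 coefficient is zero for this form).
        y = self.b0 * x + self.b2 * self.x2 - self.a1 * self.y1 - self.a2 * self.y2
        self.x2, self.x1 = self.x1, x
        self.y2, self.y1 = self.y1, y
        # Read the oldest sample, then overwrite it with the filtered one.
        delayed = self.buffer[self.pos]
        self.buffer[self.pos] = y
        self.pos = (self.pos + 1) % len(self.buffer)
        return delayed


def build_network(seed=1):
    """Return five units and a random 5x5 routing matrix (all values illustrative)."""
    centres = [110, 330, 880, 2200, 5500]      # Hz, placeholder values
    delays = [441, 882, 1323, 2205, 3087]      # samples, placeholder values
    units = [FeedbackUnit(f, 5.0, d) for f, d in zip(centres, delays)]
    rng = random.Random(seed)
    matrix = [[rng.uniform(-0.9, 0.9) for _ in units] for _ in units]
    return units, matrix


def run_network(signal, units, matrix):
    """Feed a mono signal through the units, routing each output into the others."""
    outs = [0.0] * len(units)
    result = []
    for x in signal:
        new_outs = []
        for i, unit in enumerate(units):
            fed_back = sum(matrix[i][j] * outs[j] for j in range(len(units)))
            # tanh soft-clips the summed input so the loop cannot grow without limit.
            new_outs.append(unit.process(math.tanh(x + fed_back)))
        outs = new_outs
        result.append(sum(outs) / len(units))
    return result
```

Feeding in a single impulse followed by silence (for example `run_network([1.0] + [0.0] * 4999, *build_network())`) shows how the routing matrix keeps energy circulating between the units after the input has stopped.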

Control

The most common way to control software parameters over MIDI with hardware controllers is through one-to-one mappings, where one input directly affects one output (for example, a fader controlling the volume of a single audio signal). I am more interested, however, in one-to-many (or divergent) mappings, where one control input may affect a number of parameters. More complex mappings like these, which produce sonically richer (and perhaps more unpredictable) changes, are more likely to create meaningful felt responses between performer and system, which in turn communicate musical expression and meaning to the audience (Emmerson, 2013).

The first iteration of this system (a recording of which is at the start of this post) involved very simple mappings with an iRig BlueBoard connected to two expression pedals. The buttons on the BlueBoard itself control the record/play/overdub/clear functions in the Looper device, and the first expression pedal controls the volume of the Feedback chain. The second expression pedal is mapped to the following parameters:

· A send of the unprocessed guitar signal into a Grain Delay

· The spray, pitch and randomisation of this Grain Delay

· An increase in the randomisation in the Feedback Network, and a send of this signal chain to a further delay line with noise injection

· The tempo (and therefore pitch) of the recorded loop
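The list above amounts to a single control value fanned out to several destinations. A minimal Python sketch of that idea follows. The parameter names and ranges are hypothetical placeholders, not the values used in the set, and in practice the routing is done with Ableton's MIDI mapping rather than code. The helper for the loop also assumes that the Looper repitches like tape when the tempo changes, so a tempo ratio translates directly into a pitch interval.

```python
import math

# Hypothetical targets for the second expression pedal: name -> (min, max).
# The ranges are placeholders for illustration only.
PEDAL_TARGETS = {
    "grain_delay_send": (0.0, 1.0),
    "grain_delay_spray": (0.0, 1.0),
    "grain_delay_pitch": (0.0, 1.0),
    "grain_delay_random": (0.0, 1.0),
    "feedback_random": (0.0, 1.0),
    "noise_delay_send": (0.0, 1.0),
    "loop_tempo_ratio": (1.0, 1.25),
}


def fan_out(cc_value, targets):
    """Map one 7-bit pedal value (0-127) onto every target range at once."""
    position = max(0, min(127, cc_value)) / 127
    return {name: lo + position * (hi - lo) for name, (lo, hi) in targets.items()}


def pitch_shift_semitones(tempo_ratio):
    """Pitch change of a repitched loop, assuming tape-style speed change."""
    return 12 * math.log2(tempo_ratio)


if __name__ == "__main__":
    state = fan_out(64, PEDAL_TARGETS)
    for name, value in state.items():
        print(f"{name}: {value:.3f}")
    print(f"loop pitch: {pitch_shift_semitones(state['loop_tempo_ratio']):+.2f} semitones")
```

One pedal movement changes every entry in the dictionary together, which is what makes the gesture read as a single change in intensity rather than a set of separate adjustments.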

Sonically, these changes produce a subtle but clearly audible increase in the intensity of the piece once I start manipulating the second expression pedal at 06:27.

The mappings here are linear: when I move the pedal from its minimum to its maximum position, the control values for the parameters above increase accordingly. There is a small amount of scaling of this control data (setting minimum and maximum thresholds) within Ableton, but little inverting and no way of randomising it.
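To make the distinction concrete, here is a small Python sketch of what scaling, inverting and randomising a control value could look like if the data were processed outside Ableton's mapping. The function name, its arguments and the jitter approach are assumptions for illustration; nothing like this is part of the current set.

```python
import random


def shape_cc(value, minimum=0, maximum=127, invert=False, jitter=0, rng=random):
    """Scale a 7-bit control value into [minimum, maximum].

    invert flips the pedal direction; jitter adds a random offset of up to
    +/- jitter steps. All of this is a hypothetical processing stage.
    """
    value = max(0, min(127, value))          # clamp to the 7-bit MIDI range
    if invert:
        value = 127 - value                  # heel-down now gives the maximum
    scaled = minimum + (maximum - minimum) * value / 127
    if jitter:
        scaled += rng.uniform(-jitter, jitter)
    return int(round(max(0, min(127, scaled))))


if __name__ == "__main__":
    for pedal in (0, 64, 127):
        print(pedal, shape_cc(pedal, 20, 100), shape_cc(pedal, 20, 100, invert=True))
```

With `jitter=0` the function reproduces the purely linear behaviour described above; a non-zero `jitter` is one simple way the same pedal position could yield slightly different results on each pass.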

I think that a system with one-to-many mappings invites exploration through musical engagement much more than a system that uses one-to-one mappings. With the latter, the performer might place more importance on fully understanding the mechanics of the interactions, and will need to spend more time internalising the assemblage and incorporating controllers into their body schema (Riva and Mantovani, 2012, p. 207). The more playful and accessible approach afforded by a one-to-many system delivers musical responses faster, and these in turn communicate more immediately to audiences.

Future posts will detail further iterations of this system. I plan to introduce more unpredictability by using Max for Live devices like StrangeMod, which output random control data. There is also scope to experiment with other controllers.

References

Butler, M.J. (2014) Playing with Something That Runs: Technology, Improvisation, and Composition in DJ and Laptop Performance. Oxford: Oxford University Press.

Emmerson, S. (2013) ‘Rebalancing the discussion on interactivity’, in Electroacoustic Music Studies Network Conference. Electroacoustic Music in the Context of Interactive Approaches and Networks, Lisbon.

Riva, G. and Mantovani, F. (2012) ‘From the body to the tools and back: A general framework for presence in mediated interactions’, Interacting with Computers, 24, pp. 203–210. Available at: http://dx.doi.org/10.1016/j.intcom.2012.04.007.